
Small Language Models with Agentic AI bring private, secure intelligence that reasons, remembers, and acts—transforming enterprise AI from chatbots to thinkers.

| Topic | Summary |
|---|---|
| Why is AI important in the banking sector? | The shift from traditional in-person banking to online and mobile platforms has increased customer demand for instant, personalized service. |
| AI virtual assistants in focus | Banks are investing in AI-driven virtual assistants to create hyper-personalized, real-time solutions that improve customer experiences. |
| What is the top challenge of using AI in banking? | Inefficiencies such as high Average Handling Time (AHT), a lack of real-time data, and limited personalization hinder existing customer service strategies. |
| Limits of traditional automation | Traditional automated systems struggle with nuanced queries, which makes them less effective for high-value customers with complex needs. |
| What are the benefits of AI chatbots in banking? | AI virtual assistants improve efficiency, reduce operational costs, and empower customer service representatives (CSRs) by handling repetitive tasks and offering personalized interactions. |
| Future outlook for AI-enabled virtual assistants | AI will shift CSRs into more strategic, relationship-focused roles while continuing to elevate the customer experience in banking. |

For the last two years, the world has been fixated on large language models — ChatGPT, Claude, Gemini, you name it.

They wowed everyone with scale, but scale isn’t sovereignty.

Enterprises in banking, healthcare, and government quickly realized something: they can’t outsource their intelligence layer to a public API. Every query, every inference, every memory — it all sits outside their control.

That’s where the new wave begins: Private Intelligence.

Instead of shipping sensitive data to massive cloud models, organizations are bringing intelligence in-house — building AI systems that live, reason, and evolve entirely within their own infrastructure.

And the foundation of that revolution? Small Language Models — paired with Agentic AI reasoning.

For a deeper look into how executives are planning this transition, explore the CFO Playbook for Agentic Intelligence.

Here’s the truth: the size of a model matters less than its context and control.

Small Language Models (SLMs) are compact, fine-tuned systems designed to run efficiently on local hardware — without sacrificing reasoning quality for the use case at hand.
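A quick way to see why compact models fit on local hardware is to estimate the memory their weights occupy: parameter count × bits per parameter ÷ 8 bytes. This is back-of-the-envelope arithmetic under stated assumptions: the 3B and 70B sizes are illustrative, not figures from this article, and the estimate ignores activations, the KV cache, and runtime overhead, so real deployments need more.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 10**9 bytes).

    Excludes activations, the KV cache, and runtime overhead.
    """
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Illustrative model sizes only; not tied to any specific product.
    for label, params in [("3B model", 3e9), ("70B model", 70e9)]:
        for bits in (16, 4):
            # 3B: 6.0 GB at 16-bit, 1.5 GB at 4-bit; 70B: 140.0 GB and 35.0 GB
            print(f"{label} at {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

At 4-bit precision, the illustrative 3B model's weights need about 1.5 GB, versus about 35 GB for the 70B model at the same precision.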

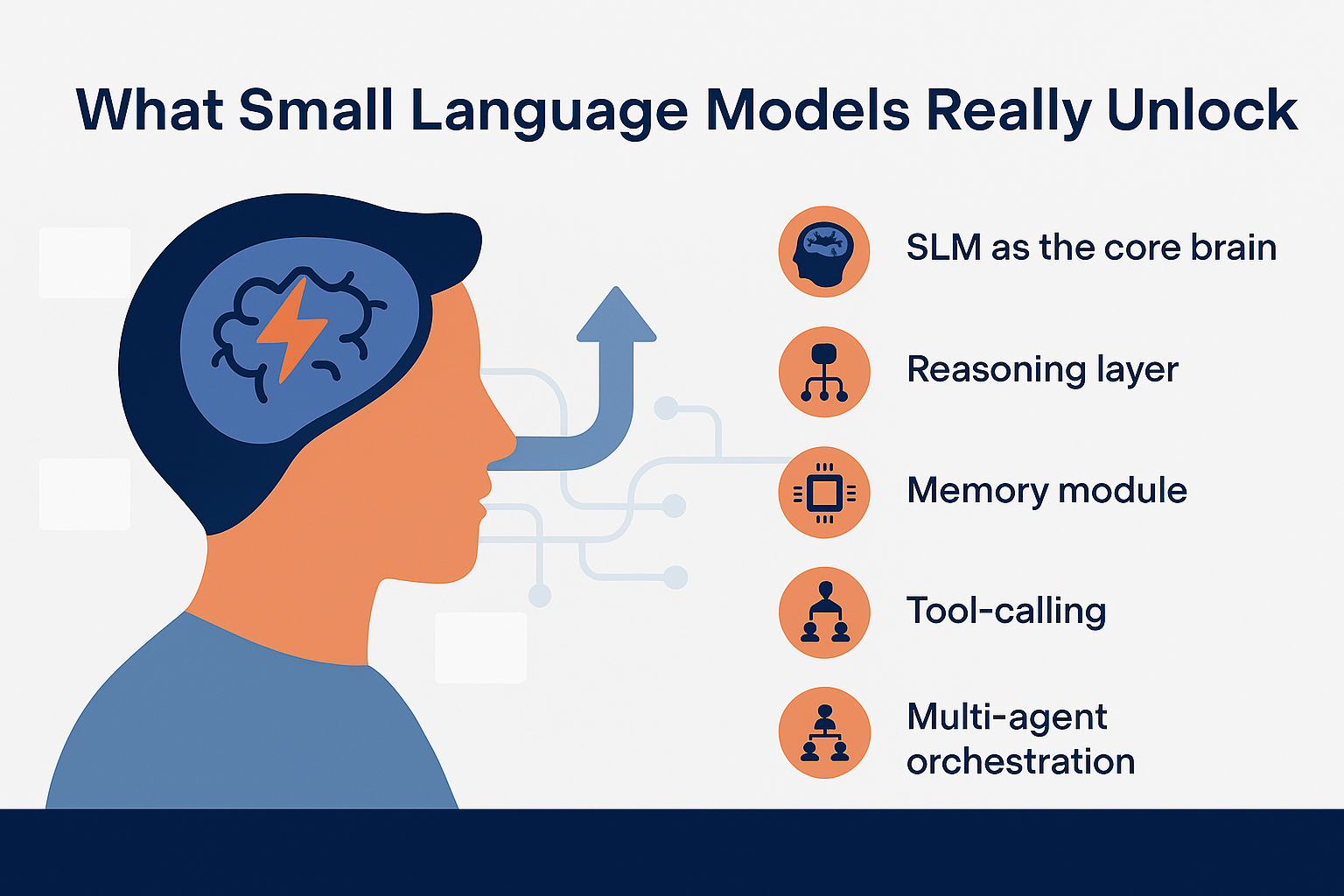

Think of them as:

SLMs thrive when connected to real enterprise data.

They become far more capable when an Agentic layer wraps around them, giving them the ability not just to generate, but to reason, remember, and act.

A Large Language Model can give you a smart answer.

An Agentic AI system can use that answer to actually do something.

That’s the key difference.
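To make "reason, remember, and act" concrete, here is a minimal agent loop. It is a hypothetical sketch, not Fluid AI's implementation: `Agent`, `stub_model`, and the `"tool:argument"` / `"done:answer"` reply format are all assumptions. In a real deployment, `model` would call a locally hosted SLM; here a stub stands in so the loop runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Minimal agent loop: the model picks a step, the agent runs it and records the result."""
    model: Callable[[str, List[str]], str]           # hypothetical SLM call: (goal, memory) -> reply
    tools: Dict[str, Callable[[str], str]]           # the only actions the agent may take
    memory: List[str] = field(default_factory=list)  # observations carried between steps

    def run(self, goal: str, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            reply = self.model(goal, self.memory)    # reason: decide the next step
            kind, _, arg = reply.partition(":")
            if kind == "done":
                return arg                           # the model says the goal is met
            if kind not in self.tools:
                self.memory.append(f"error: unknown tool {kind!r}")
                continue
            result = self.tools[kind](arg)           # act: call the chosen tool
            self.memory.append(f"{kind}({arg}) -> {result}")  # remember the outcome
        return "stopped: step limit reached"


def stub_model(goal: str, memory: List[str]) -> str:
    """Stand-in for a local SLM (hypothetical): look one record up, then answer from memory."""
    if not memory:
        return "lookup:loan_123"
    return "done:" + memory[-1]


if __name__ == "__main__":
    agent = Agent(model=stub_model, tools={"lookup": lambda key: f"status of {key} = current"})
    # prints "lookup(loan_123) -> status of loan_123 = current"
    print(agent.run("Check the status of loan_123"))
```

The step limit is a deliberate design choice: an agent that can act should also have a hard bound on how many actions it takes before returning control.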

Here’s how the stack evolves inside your enterprise:

That’s not chatbot intelligence — that’s operational intelligence.

It’s AI that behaves like a team — not a text generator.

Want to measure success when adopting such systems? Read the KPI Blueprint for Agentic AI Success.

Most leaders already sense this tension:

You want intelligence everywhere — but you can’t afford it anywhere.

Cloud-hosted LLMs create undeniable exposure points:

When the model itself lives inside your infrastructure, all those barriers dissolve.

That’s why the most forward-thinking enterprises are pivoting to SLMs + Agentic AI — a stack that’s intelligent, auditable, and sovereign by design.

Let’s visualize it inside your firewall:

So when someone asks,

“What’s the risk exposure of our retail loan portfolio under new RBI guidelines?”

The system doesn’t just summarize PDFs. It connects to internal risk dashboards, applies the rules from the new circular, and produces a compliance-ready report, all locally, without a single byte leaving your environment.

That’s not automation. That’s Agentic cognition.
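The RBI example can be sketched in miniature: pull loan exposures from an internal source, apply a rule set, and build a report, refusing any data source outside the private network. Everything here is hypothetical: `Loan`, `risk_report`, the `.internal` DNS suffix, and especially the `NEW_RISK_WEIGHTS` values, which are placeholders and not actual RBI figures.

```python
from dataclasses import dataclass
from ipaddress import ip_address
from typing import Dict, List
from urllib.parse import urlparse


@dataclass
class Loan:
    segment: str      # hypothetical portfolio segment, e.g. "personal"
    exposure: float   # outstanding amount


# Placeholder weights standing in for "the new circular". NOT actual RBI values.
NEW_RISK_WEIGHTS: Dict[str, float] = {"personal": 1.25, "credit_card": 1.25, "housing": 0.5}
DEFAULT_WEIGHT = 1.0  # assumed weight for segments the rule set does not name


def is_internal(url: str) -> bool:
    """Accept only loopback/private IPs, localhost, or an assumed '.internal' DNS suffix."""
    host = urlparse(url).hostname or ""
    try:
        addr = ip_address(host)
    except ValueError:
        return host == "localhost" or host.endswith(".internal")
    return addr.is_private or addr.is_loopback


def risk_report(loans: List[Loan], source_url: str) -> Dict[str, float]:
    """Risk-weighted exposure per segment plus a total, computed only from an internal source."""
    if not is_internal(source_url):
        raise PermissionError(f"refusing non-internal data source: {source_url}")
    report: Dict[str, float] = {}
    for loan in loans:
        weight = NEW_RISK_WEIGHTS.get(loan.segment, DEFAULT_WEIGHT)
        report[loan.segment] = report.get(loan.segment, 0.0) + loan.exposure * weight
    report["total_risk_weighted"] = sum(report.values())
    return report


if __name__ == "__main__":
    loans = [Loan("personal", 100.0), Loan("housing", 200.0)]
    # prints {'personal': 125.0, 'housing': 100.0, 'total_risk_weighted': 225.0}
    print(risk_report(loans, "http://10.0.0.5/risk"))
```

An in-code check like `is_internal` is only a last line of defense; a production system would also enforce the boundary at the network layer.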

For architecture-level insights into how these systems are deployed, explore the Fluid AI Architecture.

Most assume that only billion-parameter models can do meaningful work. Not anymore.

SLMs today, especially when powered by reasoning agents, can outperform larger models on focused enterprise tasks because they:

Paired with Agentic reasoning, these smaller models become adaptive ecosystems — thinking entities that can handle complex workflows like loan restructuring, claim adjudication, or policy enforcement.

They’re not just smaller. They’re strategically local.

Agentic AI adds what’s been missing from corporate automation — intent, memory, and decision accountability.

That’s crucial for industries where a single wrong decision can cost millions — or violate regulations.

Agentic AI doesn’t just execute tasks — it understands processes.

It can explain its reasoning, flag anomalies, and collaborate with human operators as a digital colleague, not a black box.
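A decision that can be explained and escalated needs its reasoning recorded alongside the outcome. The sketch below uses claim adjudication, one of the workflows named earlier, as the example. `decide_claim`, `Decision`, and the thresholds are hypothetical, and routing every rejection to a human reviewer is an assumed policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class Decision:
    action: str                 # "approve", "hold", or "reject"
    rationale: List[str]        # human-readable reasoning steps, kept for audit
    needs_human_review: bool    # True routes the case to a human operator
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def decide_claim(amount: float, policy_limit: float, typical_max: float) -> Decision:
    """Hypothetical claim check that records why it decided and escalates anything unusual."""
    reasons = [f"claim {amount:.2f} vs policy limit {policy_limit:.2f}"]
    if amount > policy_limit:
        reasons.append("exceeds policy limit: reject, pending human confirmation")
        return Decision("reject", reasons, needs_human_review=True)
    if amount > typical_max:
        reasons.append(f"above typical maximum {typical_max:.2f}: flag anomaly and hold")
        return Decision("hold", reasons, needs_human_review=True)
    reasons.append("within limit and typical range: approve")
    return Decision("approve", reasons, needs_human_review=False)


if __name__ == "__main__":
    # A simple in-memory audit log; a real system would persist this.
    audit_log: List[Decision] = [decide_claim(a, 10_000, 2_000) for a in (500, 5_000, 20_000)]
    for d in audit_log:
        print(d.action, d.needs_human_review, "|", "; ".join(d.rationale))
```

Only routine cases are settled automatically; anything outside the expected range reaches a human together with the reasoning that led there.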

At Fluid AI, we’ve built our entire platform around this exact vision — sovereign intelligence for regulated enterprises.

Our systems combine:

The result?

A self-contained AI ecosystem that functions like ChatGPT for your organization — but one that doesn’t compromise your sovereignty, security, or compliance.

The move toward private intelligence isn’t just a tech trend — it’s a regulatory inevitability.

As data laws tighten and LLMs get embedded into decision systems, enterprises will have no choice but to own their AI stack.

And ownership means one thing: sovereignty.

SLMs with Agentic intelligence represent the middle ground — flexible, powerful, yet contained.

They let organizations build systems that don’t just react — they reason, decide, and evolve.

That’s the true future of enterprise AI: not another model subscription, but a thinking system you control.

To understand where on-prem vs. cloud AI fits into this shift, check out Edge vs. Cloud: Where Should Your Voice AI Be?

We’re entering the age of Private Intelligence — where the smartest AI systems don’t live on the internet, but inside your enterprise.

They won’t just answer questions.

They’ll manage workflows, monitor compliance, and drive decisions — all autonomously, all securely, and all within your walls.

Because in this new world, intelligence isn’t rented.

It’s sovereign.

And that’s where Fluid AI leads — delivering Agentic AI sovereignty, powered by Small Language Models that never leave your firewall.

Fluid AI is an AI company based in Mumbai. We help organizations kickstart their AI journey. If you’re seeking a solution for your organization to enhance customer support, boost employee productivity and make the most of your organization’s data, look no further.

Take the first step on this exciting journey by booking a Free Discovery Call with us today, and let us help you make your organization future-ready and unlock the full potential of AI.
