
Bigger brains won’t save AI—smarter memory will. Agentic AI is killing chatbots, and memory-first systems are the real upgrade nobody saw coming.

| Topic | Summary |
|---|---|
| Why is AI important in the banking sector? | The shift from traditional in-person banking to online and mobile platforms has increased customer demand for instant, personalized service. |
| AI virtual assistants in focus | Banks are investing in AI-driven virtual assistants to create hyper-personalized, real-time solutions that improve customer experiences. |
| What is the top challenge of using AI in banking? | Inefficiencies such as high Average Handling Time (AHT), lack of real-time data, and limited personalization hinder existing customer service strategies. |
| Limits of traditional automation | Traditional automated systems struggle with nuanced queries, which makes them less effective for high-value customers with complex needs. |
| What are the benefits of AI chatbots in banking? | AI virtual assistants enhance efficiency, reduce operational costs, and empower CSRs by handling repetitive tasks and offering personalized interactions. |
| Future outlook of AI-enabled virtual assistants | AI will transform the role of CSRs into more strategic, relationship-focused positions while continuing to elevate the customer experience in banking. |

The current AI landscape is obsessed with model size. From GPT-3 to GPT-4 to whatever comes next, the narrative has centered around larger models offering improved results. But enterprise deployment has revealed a more nuanced truth: performance bottlenecks are increasingly caused by context limitations, not model intelligence.

Bigger models don’t inherently enable long-term memory, multi-step task execution, or persistent user understanding. In short, they don’t make an AI agent more autonomous—they just make it more verbose. For Agentic AI to work at scale, we don’t just need stronger LLMs. We need smarter memory systems.

This blog explores how memory is becoming the backbone of intelligent agents and why Fluid AI is betting on context, not just computation.

If you’re still evaluating AI performance using outdated benchmarks, you may want to rethink how you measure success in 2025.

Contextual intelligence is rapidly overtaking raw parameter count as the true differentiator.

Agentic AI refers to systems that can reason, act, and adapt autonomously. Unlike single-turn chatbots, these agents need to remember past actions, assess evolving contexts, and make informed decisions based on cumulative knowledge.

At its core, memory is what transforms an LLM-powered assistant into an agent. It enables:

Without memory, even the most powerful model is blind to history and unable to evolve.

Additionally, memory enables agents to operate under different levels of autonomy. Some agents may require supervision, while others—equipped with robust memory systems—can act independently. This spectrum of autonomy is crucial for customizing enterprise applications based on domain complexity and risk tolerance.
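One way to picture this spectrum is a policy that decides, per action, whether an agent may proceed on its own or must wait for a human. The sketch below is a minimal illustration under our own assumptions: the three autonomy levels, the 0.0 to 1.0 risk score, and the threshold values are hypothetical and are not Fluid AI's implementation.

```python
from enum import Enum


class Autonomy(Enum):
    """Hypothetical autonomy levels; a real deployment would define its own."""
    SUPERVISED = 0       # every action needs human approval
    SEMI_AUTONOMOUS = 1  # only moderately risky actions need approval
    AUTONOMOUS = 2       # the agent acts alone below a high risk ceiling


# Hypothetical thresholds: actions at or above these risk scores need a human.
APPROVAL_THRESHOLD = {
    Autonomy.SUPERVISED: 0.0,
    Autonomy.SEMI_AUTONOMOUS: 0.5,
    Autonomy.AUTONOMOUS: 0.9,
}


def needs_approval(level: Autonomy, risk: float) -> bool:
    """Return True if an action with this risk score (0.0 to 1.0)
    must be reviewed by a human under the given autonomy level."""
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0.0 and 1.0")
    return risk >= APPROVAL_THRESHOLD[level]


if __name__ == "__main__":
    for level in Autonomy:
        print(level.name, needs_approval(level, 0.3), needs_approval(level, 0.95))
```

Tuning the thresholds per domain is one way to express "risk tolerance" in configuration instead of code.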

For a deeper dive into why autonomous agents—not just assistants—are critical for the modern enterprise, read Your Enterprise Needs an Agent.

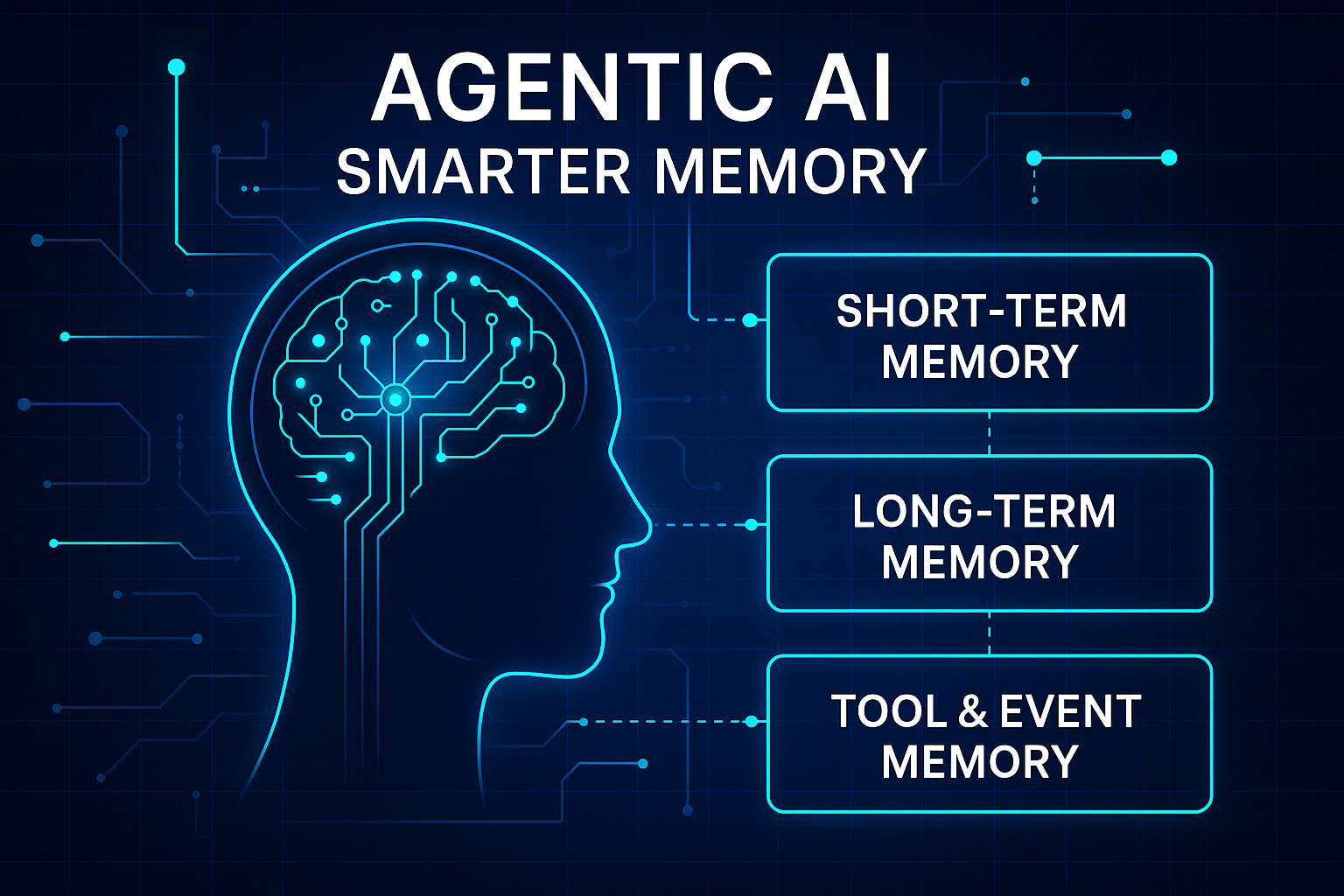

To support complex enterprise tasks, Agentic AI requires a structured approach to memory. At Fluid AI, we implement a multi-layered memory architecture:

These layers combine to form a persistent and dynamic understanding of the agent’s environment and history.

The interplay between these layers is vital. For example, short-term memory enables smooth turn-by-turn interactions, while long-term memory anchors decisions to strategic objectives. Event memory ties both together with execution context.
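As a rough illustration of how the three layers named above might fit together, here is a minimal in-memory sketch. It makes three assumptions: short-term memory is a bounded window of recent turns, long-term memory is a key-value store of durable facts and objectives, and event memory is an append-only log of executed actions. The class and method names are hypothetical and do not describe Fluid AI's actual architecture.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Event:
    """One executed action and its outcome (event memory)."""
    action: str
    result: str


@dataclass
class LayeredMemory:
    """Hypothetical three-layer memory: short-term, long-term, and event."""
    window: int = 5
    short_term: deque = field(init=False)
    long_term: dict[str, Any] = field(default_factory=dict)
    events: list[Event] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Short-term memory keeps only the most recent turns; older ones drop off.
        self.short_term = deque(maxlen=self.window)

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))

    def remember(self, key: str, value: Any) -> None:
        # Long-term memory holds facts and objectives that outlive a session.
        self.long_term[key] = value

    def log_event(self, action: str, result: str) -> None:
        # Event memory is append-only, so execution history is never rewritten.
        self.events.append(Event(action, result))

    def snapshot(self) -> dict[str, Any]:
        """Combine all three layers into one view the agent can reason over."""
        return {
            "recent_turns": list(self.short_term),
            "facts": dict(self.long_term),
            "history": [f"{e.action} -> {e.result}" for e in self.events],
        }
```

Keeping the layers separate lets each follow its own retention rule (a sliding window, durable storage, an append-only log), while `snapshot()` gives the agent a single combined view.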

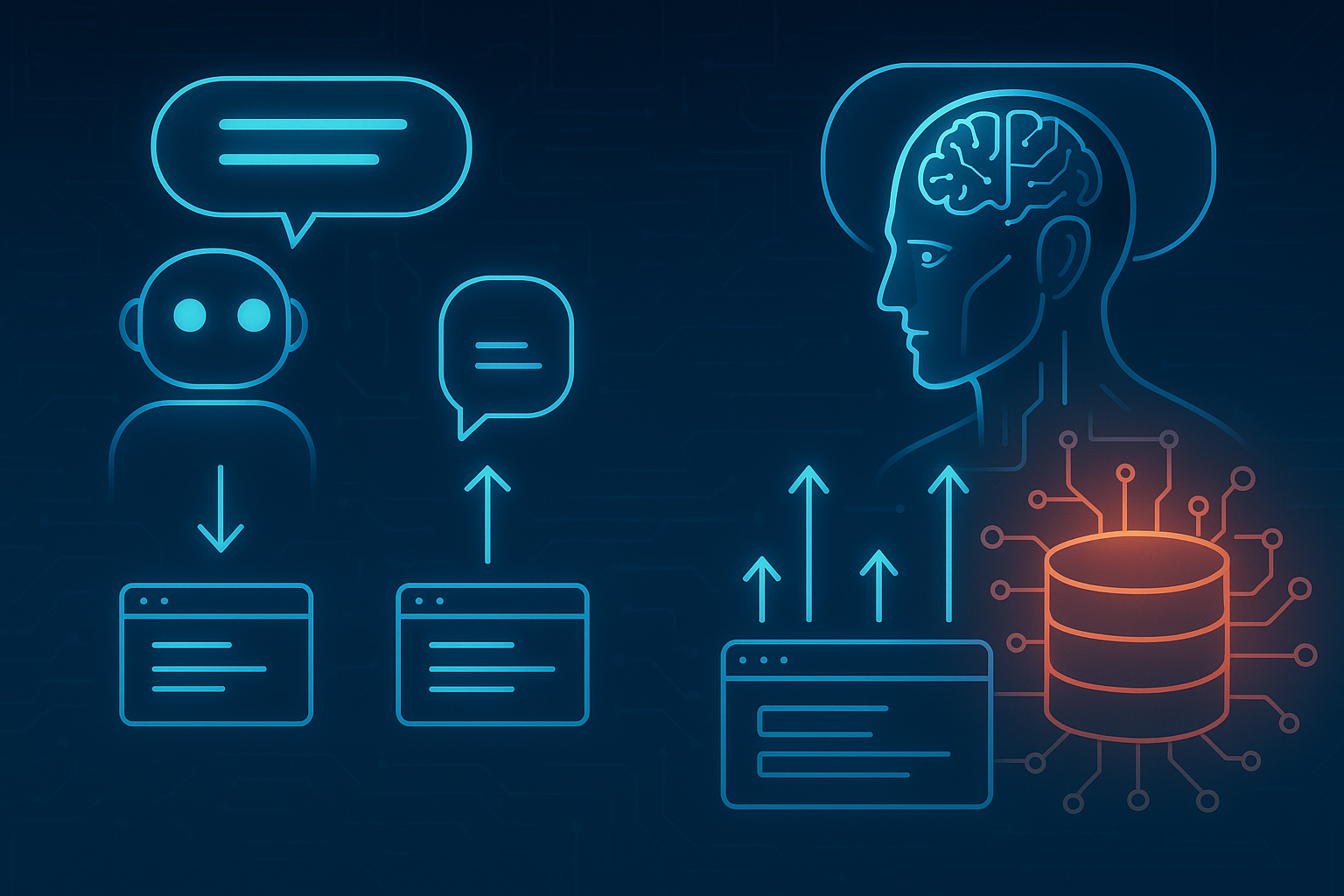

Most traditional chatbots are stateless. They handle one prompt at a time, and their understanding resets with each new user input. Even when enhanced with session memory, they lack the structure and depth to:

In contrast, an Agentic AI system with memory can:

This is a fundamental shift from "reactive response" to "context-driven reasoning."
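To make the contrast concrete, the toy sketch below compares a stateless responder, which sees only the current message, with an agent that keeps a small store of facts across turns. The regex-based fact extraction is a deliberately simplistic stand-in for what an LLM-based agent would do, and every name here is hypothetical.

```python
import re


def stateless_reply(message: str) -> str:
    """Answers from the current message alone; nothing carries over."""
    if "account number" in message.lower() and "?" in message:
        return "I don't know your account number."
    return "Noted."


class StatefulAgent:
    """Keeps facts from earlier turns and uses them to answer later ones."""

    def __init__(self) -> None:
        self.facts: dict[str, str] = {}

    def reply(self, message: str) -> str:
        # Toy extraction (an assumption): remember an account number if stated.
        match = re.search(r"account number is (\d+)", message, re.IGNORECASE)
        if match:
            self.facts["account_number"] = match.group(1)
            return "Noted."
        if "account number" in message.lower() and "?" in message:
            known = self.facts.get("account_number")
            if known:
                return f"Your account number is {known}."
            return "I don't know your account number."
        return "Noted."


if __name__ == "__main__":
    agent = StatefulAgent()
    for turn in ["My account number is 4521", "What is my account number?"]:
        print(stateless_reply(turn), "|", agent.reply(turn))
```

On the second turn, the stateless responder has nothing to draw on, while the stateful agent answers from what it stored on the first.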

Memory also lets organizations reduce cognitive friction across channels. Whether a customer engages via email or voice, agents equipped with shared memory can provide consistent and informed service. We’ve explored how AI agents are already redefining business intelligence by leveraging memory and reasoning, not just rules and reports.
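A minimal way to picture shared memory across channels is a single store, keyed by customer ID, that every channel reads from and writes to. The sketch assumes a plain in-process dictionary and hypothetical channel labels. A production system would put this behind a database or memory service.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    channel: str  # e.g. "email" or "voice" (hypothetical channel labels)
    summary: str


class SharedMemory:
    """One memory store shared by all channels, keyed by customer ID."""

    def __init__(self) -> None:
        self._by_customer: dict[str, list[Interaction]] = defaultdict(list)

    def record(self, customer_id: str, channel: str, summary: str) -> None:
        self._by_customer[customer_id].append(Interaction(channel, summary))

    def history(self, customer_id: str) -> list[Interaction]:
        # Interactions from every channel, oldest first; .get avoids creating empty entries.
        return list(self._by_customer.get(customer_id, []))


def greet_on_channel(memory: SharedMemory, customer_id: str, channel: str) -> str:
    """Opens a conversation on any channel using context gathered on the others."""
    past = memory.history(customer_id)
    if not past:
        return f"[{channel}] How can I help you today?"
    last = past[-1]
    return f"[{channel}] Following up on your {last.channel} request: {last.summary}."
```

A request recorded from an email thread is then visible when the same customer calls in, because both channels consult the same store.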

At Fluid AI, we design our platform to treat memory as a first-class citizen. Our approach is built on three core pillars:

This enables a system that doesn’t just store information but understands and retrieves it meaningfully.

This creates a robust and secure memory-sharing architecture across the Fluid AI ecosystem.

Additionally, these capabilities make memory modular and scalable. Developers can plug memory components into different workflows without building from scratch.
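One common way to make memory pluggable is to write workflows against a small interface instead of a concrete store. The sketch below assumes a hypothetical two-method `MemoryStore` protocol with two interchangeable implementations. It illustrates the general pattern, not Fluid AI's component model.

```python
from typing import Optional, Protocol


class MemoryStore(Protocol):
    """Hypothetical minimal interface a pluggable memory component must satisfy."""

    def write(self, key: str, value: str) -> None: ...

    def read(self, key: str) -> Optional[str]: ...


class DictStore:
    """Unbounded in-process store."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self._data[key] = value

    def read(self, key: str) -> Optional[str]:
        return self._data.get(key)


class CappedStore:
    """Keeps only the `capacity` most recently written keys."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._data: dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self._data.pop(key, None)  # re-insert so this key counts as most recent
        self._data[key] = value
        while len(self._data) > self.capacity:
            oldest = next(iter(self._data))  # dicts preserve insertion order
            del self._data[oldest]

    def read(self, key: str) -> Optional[str]:
        return self._data.get(key)


def onboarding_workflow(memory: MemoryStore, customer: str) -> str:
    """A workflow written against the interface, not a concrete store."""
    if memory.read(customer) is None:
        memory.write(customer, "onboarded")
        return "welcome"
    return "welcome back"
```

Swapping `DictStore` for `CappedStore` (or a database-backed class with the same two methods) leaves `onboarding_workflow` unchanged.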

Memory systems aren’t theoretical. They’re already transforming enterprise workflows. Some examples:

These aren’t just efficiencies—they’re enablers of entirely new operating models. To see how enterprises are already adopting such capabilities, visit AI Agents: Driving ROI and Actions.

In all these cases, memory turns automation into augmentation.

In traditional enterprise systems, memory lives in siloed databases, CRM logs, or human notes. In Agentic AI, memory becomes a live, queryable layer that:

This requires a rethink of the enterprise AI stack:

Memory-first architecture is the only way to scale agent autonomy safely and effectively.
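A "live, queryable" memory layer usually means agents look up relevant memories by meaning rather than by exact key, typically by comparing vector representations of the query and the stored items. The sketch below is a toy stand-in. It uses bag-of-words cosine similarity instead of learned embeddings, a substitution we assume only to keep the example dependency-free.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    norm = norm_a * norm_b
    return dot / norm if norm else 0.0


def retrieve(query: str, memories: list[str], k: int = 2) -> list[str]:
    """Return the k stored memories most similar to the query."""
    q = vectorize(query)
    ranked = sorted(memories, key=lambda m: cosine(q, vectorize(m)), reverse=True)
    return ranked[:k]
```

Replacing `vectorize` with a real embedding model would keep the retrieval logic the same while matching paraphrases that share no words.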

Just as cloud-native infrastructure changed how applications scale, memory-native AI will change how intelligence scales. Enterprises must think about memory not as a byproduct but as a product requirement.

As we move beyond prompt-centric LLM usage, the future of AI development will revolve around:

This shift will give rise to a new discipline: memory engineering, where developers and AI architects focus less on static prompts and more on dynamic context orchestration.
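Dynamic context orchestration can be pictured as choosing, at each step, which memory items go into the model's context window. The sketch below assumes each item carries a priority score and a token cost and greedily fills a fixed budget. Token cost is estimated by word count, which is a crude assumption. The function and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    priority: float  # assumed score: higher means more relevant to the current step


def approx_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def build_context(task: str, items: list[MemoryItem], budget: int) -> str:
    """Greedily add the highest-priority memory items that still fit in the
    budget, then append the task line, which is always included."""
    task_line = f"Task: {task}"
    used = approx_tokens(task_line)
    chosen: list[str] = []
    for item in sorted(items, key=lambda i: i.priority, reverse=True):
        cost = approx_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item.text)
            used += cost
    return "\n".join(chosen + [task_line])
```

A greedy fill is simple and predictable. A more careful orchestrator might score items against the current task or summarize low-priority memories rather than dropping them.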

Much like database engineers became essential in traditional tech stacks, memory engineers will define how knowledge is organized, retrieved, and used by intelligent systems.

Enterprises that master memory will unlock AI agents that are not just capable of completing tasks but continuously improving at them.

We believe the next decade of AI will be defined not by the models we build, but by the memory they access. Agentic AI doesn’t just need to be smarter. It needs to be more aware of context, history, and evolving workflows.

At Fluid AI, we’re not just scaling intelligence. We’re scaling memory.

Because in the age of autonomous agents, context isn’t just helpful; it’s everything.

Fluid AI is an AI company based in Mumbai. We help organizations kickstart their AI journey. If you’re seeking a solution for your organization to enhance customer support, boost employee productivity and make the most of your organization’s data, look no further.

Take the first step on this exciting journey by booking a Free Discovery Call with us today. Let us help you make your organization future-ready and unlock the full potential of AI.
