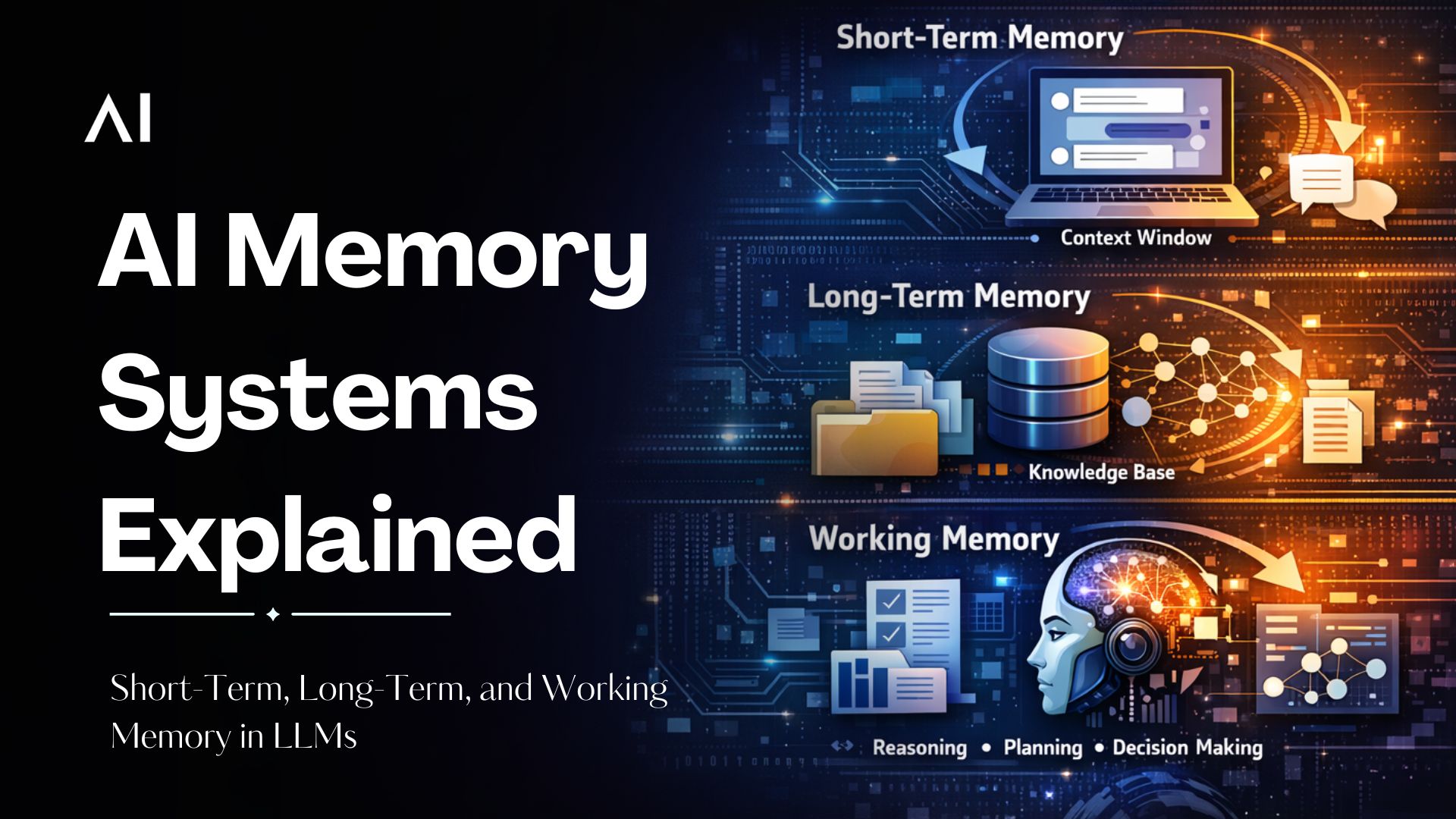


LLM memory is what enables modern AI systems to move beyond stateless responses and deliver context-aware, reliable interactions. This guide explains how short-term memory (context window), long-term memory (external knowledge stores), and working memory (real-time reasoning) work together to support coherent conversations, persistent knowledge access, and multi-step task execution.

While short-term memory maintains session continuity, long-term memory, often implemented through RAG, allows AI to retain organizational knowledge and personalize experiences across interactions. Working memory enables AI agents to combine instructions, retrieved data, and goals to reason effectively.


As large language models (LLMs) like GPT-4o / GPT-5, Claude 3.5, and Gemini 2.0 evolve, understanding how LLM memory works becomes crucial for developers, researchers, and AI practitioners. LLM memory refers to the mechanisms that enable these models to retain context, recall information, and perform complex reasoning, transforming stateless text predictors into intelligent, context-aware systems.

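This comprehensive 2026 guide breaks down the three types of LLM memory (short-term, long-term, and working memory), their common limitations, and the benefits of implementing them well.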

Understanding how AI memory works is therefore essential for anyone building AI agents, copilots, or conversational interfaces. This guide explains the different types of memory used in LLM systems and how they work together to create more reliable and capable AI.

LLM memory refers to the mechanisms that enable Large Language Models to store, manage, and retrieve information to maintain context, reduce hallucinations, and provide coherent, personalized, or persistent responses. It is broadly divided into short-term (context window) and long-term (external databases) systems that enhance AI functionality.

In practical terms, memory allows an AI system to maintain conversational continuity, retrieve knowledge when needed, and provide responses that feel consistent and informed by past interactions. Without memory, even the most advanced model would treat every user input as an isolated request.

AI memory is generally understood through three primary categories: short-term memory, long-term memory, and working memory. Each of these plays a distinct role in enabling intelligent behavior.

Short-term memory in LLMs is implemented through what’s called the context window. This is the temporary space where the model “sees” and processes information while generating a response. Unlike human memory, the model does not actively remember; everything it knows in a given moment must exist inside this window.

How It Works: Every time a user sends a message, the AI does not remember past conversations by default. Instead, the system re-sends previous messages, along with system instructions and retrieved data, into the model as part of a single prompt.

This combined input is what the model treats as “memory.”
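A minimal sketch of this re-sending step, assuming a chat-style message format with `role` and `content` fields. The function name `build_prompt`, its parameters, and the sample messages are hypothetical and only illustrate the idea; real platforms may assemble prompts differently.

```python
def build_prompt(system_instructions, history, retrieved_docs, user_message):
    """Assemble the full input the model sees for one turn.

    The model keeps no state between calls, so everything it should
    "remember" has to be packed into this list every time.
    """
    messages = [{"role": "system", "content": system_instructions}]
    if retrieved_docs:
        # Retrieved data is passed as extra context (an illustrative choice).
        context = "\n".join(retrieved_docs)
        messages.append({"role": "system", "content": "Context:\n" + context})
    messages.extend(history)  # earlier turns, re-sent verbatim
    messages.append({"role": "user", "content": user_message})
    return messages


# Made-up session data for illustration.
history = [
    {"role": "user", "content": "What is my account balance?"},
    {"role": "assistant", "content": "Your balance is shown in the app."},
]
prompt = build_prompt(
    "You are a banking assistant.",
    history,
    ["Account type: savings"],
    "Now show the last three transactions.",
)
```

Here `prompt` holds five messages: the instructions, the retrieved context, the two earlier turns, and the new question. The model receives all of them together on every call.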

The context window has a fixed size measured in tokens (pieces of words). Depending on the model, this could range from a few thousand tokens to a million or more.

As new information is added to the prompt, the window can fill up. When the limit is reached, something must be removed. Common approaches include dropping the oldest messages or summarizing earlier turns into a shorter form.

Once information falls outside the context window, the model has zero awareness of it. It is not forgotten; it simply no longer exists from the model’s perspective.
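One common way to handle a full window is to drop the oldest messages first. The sketch below assumes a crude word-based token count in place of a real tokenizer; `count_tokens`, `trim_history`, and the token budget are hypothetical names and values chosen for illustration.

```python
def count_tokens(text):
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def trim_history(messages, max_tokens):
    """Keep the most recent messages that fit within max_tokens."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    {"role": "user", "content": "I have a premium savings account."},
    {"role": "assistant", "content": "Thanks, noted."},
    {"role": "user", "content": "What benefits do I get?"},
]
trimmed = trim_history(conversation, max_tokens=8)
```

With a budget of 8 tokens, only the two newest messages survive. The statement about the premium savings account is dropped, so the model would have no way to know about it.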

This is why short-term memory is temporary and limited in size.

Why It Matters: Without short-term memory, conversations would feel disconnected. Every new input would be treated as if it were the first interaction. For example, if a user asks:

“What is my account balance?”

“Now show the last three transactions.”

The AI must remember the first question to answer the second correctly.

For example, a customer support chatbot in a banking application needs to remember user inputs within the same session. It must retain details like account type, previous queries, and authentication context during the conversation.

Long-term memory allows AI systems to retain information across multiple sessions. Unlike short-term memory, this information is stored outside the LLM itself, in external systems such as databases and knowledge stores.

Long-term memory enables persistent knowledge retrieval.

How It Works: Modern AI applications commonly use Retrieval-Augmented Generation (RAG) to connect LLMs with stored data.

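When a user asks a question, the system searches the stored data for relevant content, adds the best matches to the prompt, and the model generates its answer from that combined input.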
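The retrieve-then-generate flow can be sketched as follows. This is a toy version under stated assumptions: word-count vectors stand in for learned embeddings, an in-memory list stands in for an external store, and the documents, function names, and prompt wording are all made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    # Toy "embedding": lowercase word counts. Real systems use a learned model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    # Cosine similarity between two word-count vectors.
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    # Rank stored documents by similarity to the query and keep the best ones.
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(query, documents):
    # Insert the retrieved text into the prompt ahead of the question.
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Claims must be filed within 30 days of the incident.",
    "Premium savings accounts include free international transfers.",
    "Office hours are 9am to 5pm on weekdays.",
]
rag_prompt = build_rag_prompt("What do premium savings accounts include?", knowledge_base)
```

The resulting `rag_prompt` would then be sent to the model. The model itself is unchanged; it only sees the retrieved text because it was placed in the context window.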

Why It Matters: Long-term memory allows AI systems to retain organizational knowledge and personalize experiences across interactions.

Without long-term memory, users would need to repeat the same information every time.

For example, an insurance support assistant can recall policy details or previous claims even if the customer returns days later. Instead of repeating information, the user experiences a conversation that feels persistent and personalized.

In enterprise settings, long-term memory also enables AI systems to act as knowledge access layers, helping employees retrieve relevant documents, policies, or historical decisions quickly.

Working memory refers to the active processing space where an AI system performs reasoning, planning, and decision-making. It is not just about storing information; it is about using that information in real time. Working memory combines instructions, retrieved data, and goals.

Working memory is particularly important for multi-step tasks such as comparing options, analyzing data, or generating structured outputs. It enables the system to track progress, maintain intermediate results, and adjust its strategy as new information becomes available.

For example, an enterprise procurement assistant evaluating vendors must consider pricing, compliance requirements, and historical performance simultaneously. Handling these multiple variables requires a dynamic processing layer that continuously updates the state of the task.
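One way to picture this processing layer is as an explicit task state that each step reads from and writes to. The sketch below is an assumption about how such a state might look, not a description of any specific agent framework; `TaskState`, the step names, and the vendor figures are invented, and only pricing and compliance are modeled to keep it short.

```python
from dataclasses import dataclass, field


@dataclass
class TaskState:
    """Scratchpad holding the goal, progress, and intermediate results."""
    goal: str
    pending_steps: list
    results: dict = field(default_factory=dict)

    def record(self, step, value):
        # Store an intermediate result and mark the step as done.
        self.results[step] = value
        self.pending_steps.remove(step)


# Made-up vendor data for illustration.
vendors = {
    "Vendor A": {"price": 120, "compliant": True},
    "Vendor B": {"price": 100, "compliant": False},
    "Vendor C": {"price": 110, "compliant": True},
}

state = TaskState(
    goal="Pick the cheapest compliant vendor",
    pending_steps=["filter_compliance", "compare_price"],
)

# Step 1: the compliance filter narrows the candidates.
compliant = [name for name, v in vendors.items() if v["compliant"]]
state.record("filter_compliance", compliant)

# Step 2: the price comparison reads the result of step 1 from the state.
cheapest = min(state.results["filter_compliance"], key=lambda n: vendors[n]["price"])
state.record("compare_price", cheapest)
```

The cheapest vendor overall fails the compliance check, so the second step only gets the right answer because it builds on the stored result of the first.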

As AI agents become more autonomous, the importance of working memory continues to grow.

Even the most advanced AI systems don’t have perfect memory. In real-world deployments, teams must design around a few common limitations.

The AI does not always forget earlier information completely; sometimes it simply stops paying attention to parts of a long conversation.

The Issue:

Imagine you are chatting with an AI support assistant for 20–30 messages. Partway through the conversation you mention your account type and location. Later, after many more messages, the AI may answer recent questions correctly but ignore the details you shared in the middle of the conversation.

For example:

User earlier: “I have a premium savings account.”

Later question: “What benefits do I get?”

If that information sits in the middle of a long prompt, the AI might respond with generic benefits instead of premium-specific ones.

The Fix:

Modern AI systems reorganize or highlight important context so it appears at the beginning or end of the prompt, where models naturally focus more attention.
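A minimal sketch of such reordering, assuming each piece of context already carries an importance score. How that score is produced is outside this example, and `reorder_context` and the sample chunks are hypothetical.

```python
def reorder_context(chunks):
    """Place the highest-priority chunks at the edges of the prompt.

    `chunks` is a list of (priority, text) pairs; higher priority = more important.
    Chunks are dealt alternately to the front and the back in priority order,
    so the least important ones end up in the middle.
    """
    ranked = sorted(chunks, key=lambda c: c[0], reverse=True)
    front, back = [], []
    for index, (_, text) in enumerate(ranked):
        if index % 2 == 0:
            front.append(text)
        else:
            back.append(text)
    return front + back[::-1]


chunks = [
    (1, "Small talk about the weather."),
    (3, "User has a premium savings account."),
    (2, "User is based in Mumbai."),
    (0, "Greeting."),
]
ordered = reorder_context(chunks)
```

The account type now opens the context and the location closes it, while the greeting and small talk sit in the middle where reduced attention matters least.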

Better memory improves responses, but it also increases infrastructure cost.

The Issue:

If a chatbot keeps sending the entire conversation history every time you ask something new, the system processes more and more tokens with each message. Over long sessions, this slows response time and increases API costs significantly.

For example, a customer support chat that includes 50 previous messages must resend all of them every time the user types a new question.
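The growth can be estimated with simple arithmetic. The sketch below assumes every message has the same length and ignores system instructions and assistant replies, so the figures are illustrative only; `total_tokens_processed` and the 40-token message size are assumptions.

```python
def total_tokens_processed(num_turns, tokens_per_message):
    """Total input tokens across a session when full history is re-sent each turn.

    Turn k sends k messages (the new one plus k - 1 earlier ones), so the total
    is tokens_per_message * (1 + 2 + ... + num_turns).
    """
    return tokens_per_message * num_turns * (num_turns + 1) // 2


resent = total_tokens_processed(num_turns=50, tokens_per_message=40)  # 51000
sent_once = 50 * 40  # 2000 tokens if each message were processed only once
```

Under these assumptions, re-sending the history costs 51,000 input tokens over 50 turns, about 25 times the 2,000 tokens of the messages themselves. The total grows with the square of the number of turns.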

The Fix:

Techniques like KV (key-value) caching store the attention keys and values already computed for earlier tokens, so the system doesn’t need to recompute them for every new message. This helps maintain context without repeatedly paying the full compute cost.

Long-term memory systems depend on retrieving stored information, but retrieval is not always perfectly relevant.

The Issue:

Suppose a user asks:

“How do I cancel my account?”

The memory system might retrieve an unrelated past conversation where another user complained angrily about cancellations. The AI could mistakenly assume the current user is frustrated and respond with unnecessary apologies or incorrect steps.

The Fix:

Reranking models score retrieved information for relevance before it is passed to the AI. This makes it far more likely that only context relevant to the user’s current question is used.
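The idea can be sketched with a toy scorer. Production rerankers are typically trained models; the word-overlap score, the `min_score` threshold, and the sample texts below are assumptions chosen only to show the filtering step.

```python
import re


def relevance(query, candidate):
    # Toy scorer: fraction of query words that also appear in the candidate.
    query_words = set(re.findall(r"[a-z]+", query.lower()))
    candidate_words = set(re.findall(r"[a-z]+", candidate.lower()))
    if not query_words:
        return 0.0
    return len(query_words & candidate_words) / len(query_words)


def rerank(query, candidates, min_score=0.3, top_k=2):
    """Score each retrieved candidate, drop weak matches, keep the best top_k."""
    scored = [(relevance(query, c), c) for c in candidates]
    kept = [pair for pair in scored if pair[0] >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept[:top_k]]


retrieved = [
    "Another customer complained angrily about cancellations.",
    "You can cancel your account from the Settings page.",
]
context = rerank("How do I cancel my account?", retrieved)
```

Only the Settings page instruction passes the threshold, so the unrelated complaint never reaches the model.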

When AI memory systems are implemented effectively, they dramatically improve reliability and usefulness.

AI applications become capable of maintaining coherent conversations across extended interactions, reducing the need for users to repeat information. Memory also helps ground responses in factual data, which can significantly reduce hallucinations.

Persistent memory enables personalization, allowing AI systems to adapt responses based on user preferences or historical context. This creates more consistent and relevant experiences over time.

At an organizational level, strong memory architecture allows AI solutions to scale across departments and workflows. Many production deployments find that memory is the defining factor separating a basic chatbot from a dependable AI assistant capable of handling complex tasks.

AI memory systems play a foundational role in transforming LLMs from stateless generators into context-aware, intelligent systems.

Short-term memory enables continuity within active interactions. Long-term memory provides persistent access to knowledge across sessions. Working memory supports reasoning and multi-step execution.

Together, these layers allow AI applications to deliver more reliable, personalized, and scalable experiences.

As organizations continue integrating AI into core processes, the effectiveness of memory architecture will increasingly determine how capable and trustworthy these systems become.

At Fluid AI, memory is treated as a core architectural capability, not an afterthought. Our enterprise AI platform combines short-term context handling, long-term knowledge retrieval, and working memory for reasoning, enabling the development of secure, scalable, and production-ready AI agents for real business use.

Fluid AI is an AI company based in Mumbai. We help organizations kickstart their AI journey. If you’re seeking a solution for your organization to enhance customer support, boost employee productivity and make the most of your organization’s data, look no further.

Take the first step on this exciting journey by booking a Free Discovery Call with us today and let us help you make your organization future-ready and unlock the full potential of AI for your organization.
